Evaluation of English-Spanish machine translation

An article about machine translation was translated into Spanish by Google Translate (https://translate.google.com). In September 2009, professional translators evaluated the translation for fluency and for accuracy.

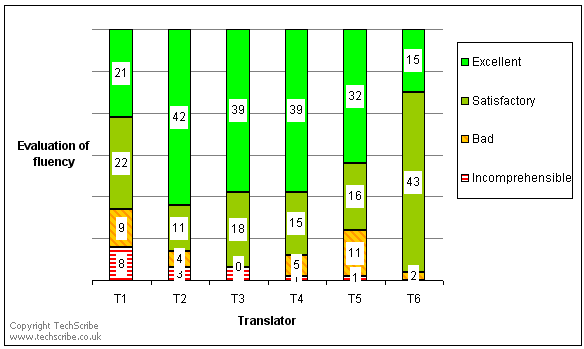

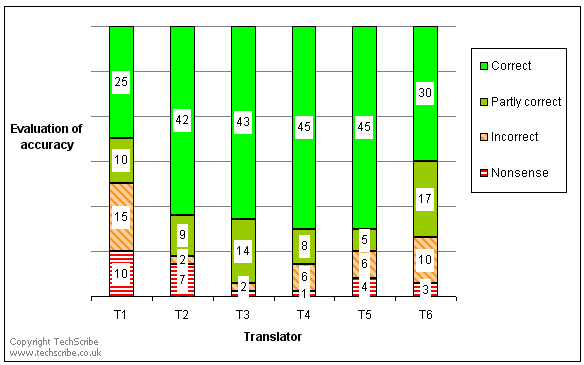

The results for fluency are in Figure 1. The results for accuracy are in Figure 2. Full details of the evaluation are in mt-evaluation-en-es.xls.

Figure 1. The fluency of Spanish machine translation of international English

Figure 2. The accuracy of Spanish machine translation of international English

The translators who evaluated the machine translation include the following people:

- T1, Roz Tarry (www.languagehelp.co.uk)

- T2, Lorena Guerra (www.proz.com/profile/96717)

- T4, María Elisa Pelletta

- T5, Juan Martín Fernández Rowda (www.proz.com/profile/36893)

- T6, Carina Avila

Notes about the results

We chose to evaluate Spanish because we expected to get satisfactory results. Possibly, for other languages, the quality of machine translation is low.

Usually, the translators agree in their evaluations. However, sometimes they disagree, for example, about the accuracy of sentence 44.
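One possible method to find such disagreements is to compare the highest score and the lowest score for each sentence. The sketch below shows this method. The scale of 1 to 4, the scores, the threshold, and the function names `rating_spread` and `find_disagreements` are all assumptions for illustration. They are not from mt-evaluation-en-es.xls.

```python
# Hypothetical scores: sentence number -> one score from each translator.
# The 1-4 scale and all values are invented for illustration only.
ratings = {
    1: [4, 4, 3, 4, 4],
    2: [1, 4, 2, 4, 3],
    3: [2, 2, 2, 3, 2],
}


def rating_spread(scores):
    """Return the difference between the highest and the lowest score."""
    return max(scores) - min(scores)


def find_disagreements(ratings, threshold=2):
    """Return the sentence numbers whose spread is at least `threshold`."""
    return [
        number
        for number, scores in sorted(ratings.items())
        if rating_spread(scores) >= threshold
    ]


disagreements = find_disagreements(ratings)
print(disagreements)
```

With these invented scores, only sentence 2 has a spread that is equal to or more than the threshold. A different threshold, or a formal measure of agreement, could be more applicable to the real data.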

Sentence 38 is an example of what not to write. The accuracy of its translation is low.

The article contains technical terms such as 'phrasal verb'. Sometimes, the translation of such a term is not correct.

Some comments from the translators

"Overall, I can more or less understand the general idea and some of the detail. However, some of the key words, examples and sentences do not make sense, appear to be contradictory, do not follow on logically or are unclear, so the point being made is somewhat lost and the reader gets a bit confused. Therefore, as a result, doubt creeps in as to the overall understanding." T1.

"Overall meaning is understandable but there are some punctuation, grammar and stylistic mistakes. If taken as a whole the general impression is that it is artificial, transliteral and sounds odd for a Spanish reader as the sentences are not linked stylistically. It seems like one statement after another. It wouldn't be a translation you could charge a client. Albeit, it could be a good tool for translators if proofread and adequately expressed in target language as it would save a translator a lot of time." T3.

"Overall the meaning of the text is easy to understand. There are only a few sentences that don't make sense and one that could mislead the reader. Having said that, I believe that for most of them, the meaning can be elicited when read in context." T4.

"Overall, the fluency of the text is acceptable and allows the reader to roughly understand the text. Many gender and number agreement issues were spotted. I'd suggest that this is the main factor undermining fluency in this text. Use of determiners could be further improved. When dealing with the English phrasal verb examples, results are not good. This is due mainly because the translation needs to adapt those phrasal verbs instead of creating a literal translation (and this is a big challenge for any MT engine)…" T5.

"The translation can be understood. The main problems are collocations and agreement." T6.